Installing Neo4j Graph Database and Building a Cluster on CentOS

Installing Neo4j

Server preparation

Three nodes are used in this setup.

| Name | IP | OS | CPU | Memory | Disk |
|---|---|---|---|---|---|
| node01 | 10.186.63.108 | CentOS 7.6 | 4 | 8G | 60G |
| node02 | 10.186.63.112 | CentOS 7.6 | 4 | 8G | 60G |
| node03 | 10.186.63.114 | CentOS 7.6 | 4 | 8G | 60G |

System environment preparation

Raise the maximum number of open files: edit /etc/security/limits.conf and add the following.

    root soft nofile 60000
    root hard nofile 60000
    neo4j soft nofile 60000
    neo4j hard nofile 60000
    panos soft nofile 60000
    panos hard nofile 60000

Edit /etc/pam.d/su and add the following.

    session required pam_limits.so

Reboot the server.

    reboot
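After the reboot it can be useful to confirm that the new limit is actually in effect for the current user. The following is a minimal sketch, not part of the original procedure; the helper name `nofile_ok` is hypothetical, and 60000 is simply the value configured above.

```shell
#!/usr/bin/env bash
# Compare the observed open-file limit with the value set in limits.conf.
required=60000   # value used in this guide

# nofile_ok OBSERVED REQUIRED -> succeeds when OBSERVED is high enough.
# (hypothetical helper, introduced only for this illustration)
nofile_ok() {
  local observed="$1" wanted="$2"
  [ "$observed" = "unlimited" ] && return 0
  [ "$observed" -ge "$wanted" ]
}

current="$(ulimit -n)"
if nofile_ok "$current" "$required"; then
  echo "nofile limit OK: $current"
else
  echo "nofile limit too low: $current (expected >= $required)"
fi
```

Running the script as each of the accounts listed in limits.conf (for example via `su - neo4j`) shows whether the pam_limits setting is applied to that account.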

JDK environment

Unpack the archive. Note that jdk-8u221 unpacks into the directory jdk1.8.0_221.

    tar -zxvf jdk-8u221-linux-x64.tar.gz
    mv jdk1.8.0_221 /data/jdk1.8

Edit the environment variables.

    vi /etc/profile

Add the following.

    #set java environment
    PATH=/data/jdk1.8/bin:$PATH
    export PATH
    export JAVA_HOME=/data/jdk1.8

Apply the change.

    source /etc/profile

Check the Java version.

    # java -version
    Java(TM) SE Runtime Environment (build 1.8.0_221-b11)
    Java HotSpot(TM) 64-Bit Server VM (build 25.221-b11, mixed mode)
    # which java
    /data/jdk1.8/bin/java

Prepare the JDK's JMX password file.

    cd /data/jdk1.8/jre/lib/management
    cp jmxremote.password.template jmxremote.password
    chmod 700 jmxremote.password
    vim jmxremote.password

Uncomment the following two lines.

    monitorRole QED
    controlRole R&D

Finally, restrict the file permissions again.

    chmod 0400 jmxremote.password

Install Neo4j

    tar -zxvf neo4j-enterprise-3.5.5-unix.tar.gz
    mv neo4j-enterprise-3.5.5 /data/neo4j

Edit the environment variables.

    vi /etc/profile

Add the following.

    #set neo4j environment
    NEO4J_HOME=/data/neo4j
    PATH=$PATH:$NEO4J_HOME/bin
    export NEO4J_HOME PATH

Apply the change.

    source /etc/profile

Building a high-availability (HA) cluster

Configuration for node01:

    dbms.directories.import=import
    …=false
    …=true
    dbms.connector.bolt.listen_address=10.186.63.108:7687
    …=true
    dbms.connector.http.listen_address=10.186.63.108:7474
    …=true
    dbms.jvm.additional=-XX:+UseG1GC
    dbms.jvm.additional=-XX:-OmitStackTraceInFastThrow
    dbms.jvm.additional=-XX:+AlwaysPreTouch
    dbms.jvm.additional=-XX:+UnlockExperimentalVMOptions
    dbms.jvm.additional=-XX:+TrustFinalNonStaticFields
    dbms.jvm.additional=-XX:+DisableExplicitGC
    dbms.jvm.additional=-Djdk.tls.ephemeralDHKeySize=2048
    …=true
    dbms.windows_service_name=neo4j
    dbms.jvm.additional=-Dunsupported.dbms.udc.source=tarball
    dbms.connectors.default_listen_address=10.186.63.108
    dbms.connectors.default_advertised_address=10.186.63.108
    dbms.mode=HA
    ha.server_id=108
    ha.initial_hosts=10.186.63.108:5001,10.186.63.112:5001,10.186.63.114:5001
    ha.host.data=10.186.63.108:6001
    ha.join_timeout=30
    dbms.security.procedures.unrestricted=algo.*,apoc.*
    dbms.jvm.additional=-Dcom.sun.management.jmxremote.port=3637

Configuration for node02:

    dbms.directories.import=import
    …=false
    …=true
    dbms.connector.bolt.listen_address=10.186.63.112:7687
    …=true
    dbms.connector.http.listen_address=10.186.63.112:7474
    …=true
    dbms.jvm.additional=-XX:+UseG1GC
    dbms.jvm.additional=-XX:-OmitStackTraceInFastThrow
    dbms.jvm.additional=-XX:+AlwaysPreTouch
    dbms.jvm.additional=-XX:+UnlockExperimentalVMOptions
    dbms.jvm.additional=-XX:+TrustFinalNonStaticFields
    dbms.jvm.additional=-XX:+DisableExplicitGC
    dbms.jvm.additional=-Djdk.tls.ephemeralDHKeySize=2048
    …=true
    dbms.windows_service_name=neo4j
    dbms.jvm.additional=-Dunsupported.dbms.udc.source=tarball
    dbms.connectors.default_listen_address=10.186.63.112
    dbms.connectors.default_advertised_address=10.186.63.112
    dbms.mode=HA
    ha.server_id=112
    ha.initial_hosts=10.186.63.108:5001,10.186.63.112:5001,10.186.63.114:5001
    ha.host.data=10.186.63.112:6001
    ha.join_timeout=30
    dbms.security.procedures.unrestricted=algo.*,apoc.*
    dbms.jvm.additional=-Dcom.sun.management.jmxremote.port=3637

Configuration for node03:

    dbms.directories.import=import
    …=false
    …=true
    dbms.connector.bolt.listen_address=10.186.63.114:7687
    …=true
    dbms.connector.http.listen_address=10.186.63.114:7474
    …=true
    dbms.jvm.additional=-XX:+UseG1GC
    dbms.jvm.additional=-XX:-OmitStackTraceInFastThrow
    dbms.jvm.additional=-XX:+AlwaysPreTouch
    dbms.jvm.additional=-XX:+UnlockExperimentalVMOptions
    dbms.jvm.additional=-XX:+TrustFinalNonStaticFields
    dbms.jvm.additional=-XX:+DisableExplicitGC
    dbms.jvm.additional=-Djdk.tls.ephemeralDHKeySize=2048
    …=true
    dbms.windows_service_name=neo4j
    dbms.jvm.additional=-Dunsupported.dbms.udc.source=tarball
    dbms.connectors.default_listen_address=10.186.63.114
    dbms.connectors.default_advertised_address=10.186.63.114
    dbms.mode=HA
    ha.server_id=114
    ha.initial_hosts=10.186.63.108:5001,10.186.63.112:5001,10.186.63.114:5001
    ha.host.data=10.186.63.114:6001
    ha.join_timeout=30
    dbms.security.procedures.unrestricted=algo.*,apoc.*
    dbms.jvm.additional=-Dcom.sun.management.jmxremote.port=3637
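The three HA configurations differ only in the node's own IP address and in `ha.server_id`, which in this guide is the last octet of that address. The sketch below prints just those node-specific settings so they can be compared across nodes; the function name `ha_settings` is hypothetical, and the script deliberately does not touch neo4j.conf.

```shell
#!/usr/bin/env bash
# Print the node-specific HA settings for each cluster member.
nodes="10.186.63.108 10.186.63.112 10.186.63.114"

# ha_settings IP -> settings that vary per node (hypothetical helper).
ha_settings() {
  local ip="$1" hosts="" n
  for n in $nodes; do
    hosts="${hosts:+$hosts,}$n:5001"      # build the ha.initial_hosts list
  done
  cat <<EOF
dbms.connector.bolt.listen_address=$ip:7687
dbms.connector.http.listen_address=$ip:7474
dbms.connectors.default_listen_address=$ip
dbms.connectors.default_advertised_address=$ip
dbms.mode=HA
ha.server_id=${ip##*.}
ha.initial_hosts=$hosts
ha.host.data=$ip:6001
EOF
}

for ip in $nodes; do
  echo "# --- $ip ---"
  ha_settings "$ip"
done
```

Deriving `ha.server_id` from the address is only a convention that keeps the IDs unique; any distinct integer per node would serve the same purpose.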

三个节点启动neo4j

1

|

neo4j start

|

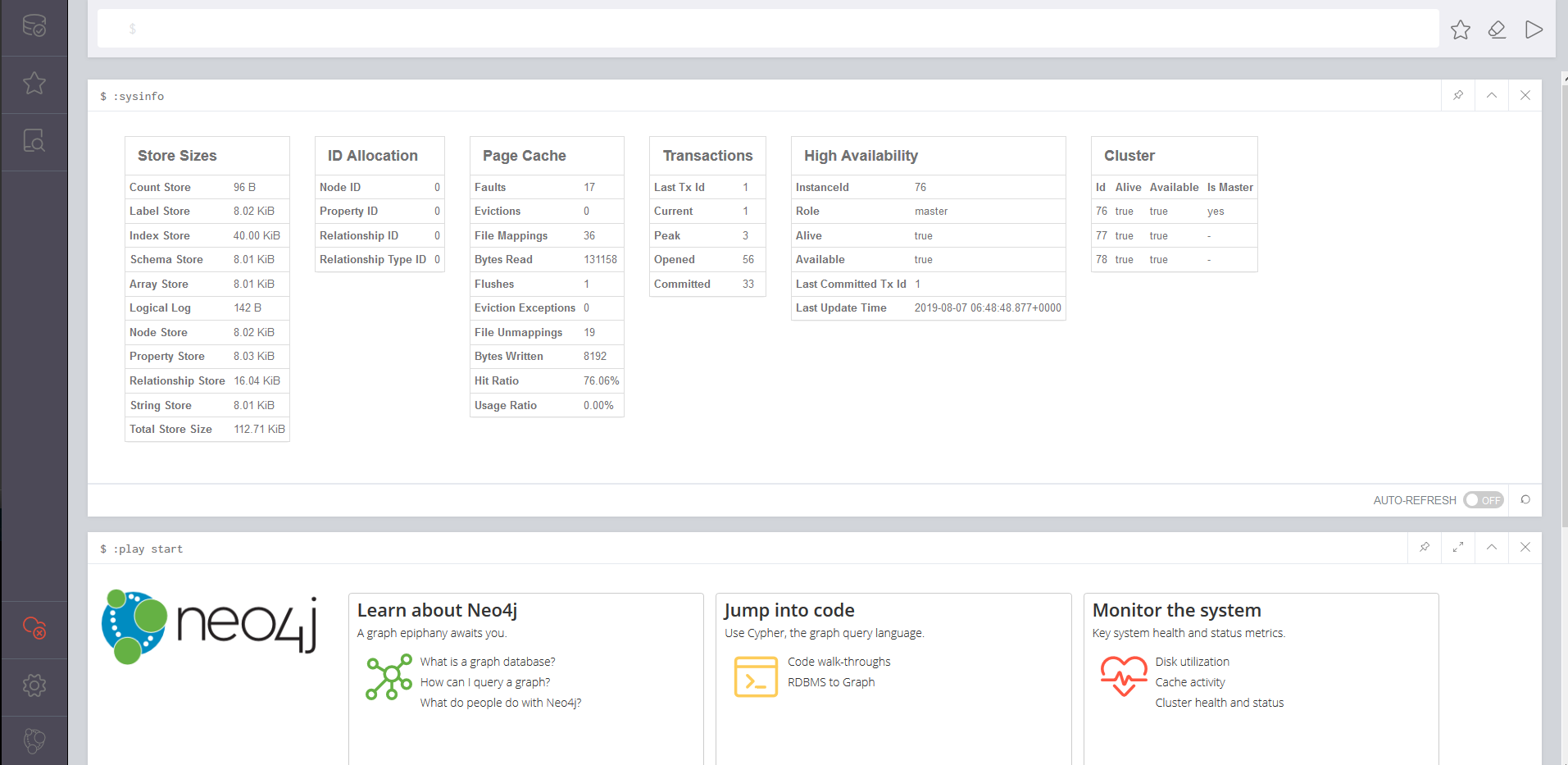

启动完成后可以通过浏览器从任一节点的7474端口查看集群状态
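The role of each node can also be checked from the command line through the HA status endpoint `/db/manage/server/ha/master`, the same endpoint the HAProxy health checks in this guide rely on. The sketch below assumes that endpoint answers 200 on the master and 404 on other members, and that it is reachable without credentials; with authentication enabled an Authorization header would have to be added. `role_from_status` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Report whether each node currently answers as the HA master.
nodes="10.186.63.108 10.186.63.112 10.186.63.114"

# Map the HTTP status of GET /db/manage/server/ha/master to a label.
role_from_status() {
  case "$1" in
    200) echo "master" ;;
    404) echo "not master" ;;
    *)   echo "unknown" ;;     # unreachable, unauthorized, etc.
  esac
}

# Contact the servers only when called with --run.
if [ "${1:-}" = "--run" ]; then
  for ip in $nodes; do
    code="$(curl -s -o /dev/null --max-time 3 -w '%{http_code}' \
      "http://$ip:7474/db/manage/server/ha/master")"
    echo "$ip: $(role_from_status "$code")"
  done
fi
```

Exactly one node should report `master` in a healthy HA cluster.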

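Important Neo4j ports

The important Neo4j ports are configured as follows.

| Name | Default port | Related settings | Notes |
|---|---|---|---|
| Backup | 6362-6372 | dbms.backup.enabled, dbms.backup.address | Backup is disabled by default. In production, external access to this port is controlled by the firewall. |
| HTTP | 7474 | | Opening this port for external access is not recommended in production because the traffic is unencrypted. Used by Neo4j Browser and by the REST API. |
| HTTPS | 7473 | | Used by the REST API. |
| Bolt | 7687 | | Used by Cypher Shell and Neo4j Browser. |
| Causal Cluster | 5000, 6000, 7000 | causal_clustering.discovery_listen_address, causal_clustering.transaction_listen_address, causal_clustering.raft_listen_address | The ports listed are the defaults in neo4j.conf; an actual installation may use different ports and must be adjusted accordingly. |
| HA cluster | 5001, 6001 | ha.host.coordination, ha.host.data | The ports listed are the defaults in neo4j.conf; an actual installation may use different ports and must be adjusted accordingly. |
| Graphite monitoring | 2003 | metrics.graphite.server | Port on which Neo4j communicates with the Graphite server. |
| Prometheus monitoring | 2004 | metrics.prometheus.enabled and metrics.prometheus.endpoint | Port used for Prometheus monitoring. |
| JMX monitoring | 3637 | dbms.jvm.additional=-Dcom.sun.management.jmxremote.port=3637 | JMX monitoring port. Monitoring the database this way is not recommended; it is disabled by default. |
| Neo4j-shell | 1337 | dbms.shell.port=1337 | The neo4j-shell tool is deprecated and should no longer be used. |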

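Installing plugins

Install the APOC and Graph Algorithms plugins (run these steps on every node).

Download the packages, paying attention to version compatibility.

Download the APOC JAR file from https://github.com/neo4j-contrib/neo4j-apoc-procedures/releases

Download the algo JAR file from https://github.com/neo4j-contrib/neo4j-graph-algorithms/releases

Copy the JAR files into the plugins directory under the Neo4j installation directory.

    cp apoc-3.5.0.4-all.jar graph-algorithms-algo-3.5.4.0.jar /data/neo4j/plugins

Add the following to the configuration file.

    dbms.security.procedures.unrestricted=algo.*,apoc.*

Restart Neo4j.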

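Transaction log configuration

In production, transaction log files frequently grow too large. The following settings limit their size.

Transaction log parameters

    dbms.tx_log.rotation.retention_policy=<true|false>

If set to true, transaction logs are kept indefinitely and keep growing.

If set to false, only the most recent non-empty transaction log is kept.

Alternatively:

    dbms.tx_log.rotation.retention_policy=<amount> <type>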

The available types are listed below.

| Type | Description | Example |
|---|---|---|
| files | Number of most recent logical log files to keep | "10 files" |
| size | Max disk size to allow log files to occupy | "300M size" or "1G size" |
| txs | Number of transactions to keep | "250k txs" or "5M txs" |
| hours | Keep logs which contain any transaction committed within N hours from current time | "10 hours" |
| days | Keep logs which contain any transaction committed within N days from current time | "50 days" |

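Recommended configuration

    dbms.tx_log.rotation.retention_policy=5G size
    dbms.checkpoint.interval.time=3600s
    dbms.checkpoint.interval.tx=1
    dbms.tx_log.rotation.size=250M

With these settings:

Up to 5G of transaction logs are retained.

A checkpoint is performed every 3600 seconds (one hour).

A checkpoint is also triggered after every transaction.

Each log file is rotated at 250MB (250MB is the default and can be changed).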
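As a rough sanity check on the recommended values, the retention size divided by the rotation size gives the approximate number of rotated log files that stay on disk. The arithmetic below is only an estimate and assumes that G and M are binary units (1G = 1024M).

```shell
#!/usr/bin/env bash
# Estimate how many rotated transaction log files fit in the retention budget.
retention_mb=$((5 * 1024))   # dbms.tx_log.rotation.retention_policy=5G size
rotation_mb=250              # dbms.tx_log.rotation.size=250M

files=$((retention_mb / rotation_mb))
echo "about $files log files of ${rotation_mb}M are retained"
```

Lowering the rotation size yields more, smaller files within the same disk budget.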

Building a Causal Cluster

Configuration for node01:

    dbms.directories.import=import
    …=false
    …=true
    dbms.connector.bolt.listen_address=0.0.0.0:7687
    …=true
    dbms.connector.http.listen_address=0.0.0.0:7474
    …=true
    dbms.jvm.additional=-XX:+UseG1GC
    dbms.jvm.additional=-XX:-OmitStackTraceInFastThrow
    dbms.jvm.additional=-XX:+AlwaysPreTouch
    dbms.jvm.additional=-XX:+UnlockExperimentalVMOptions
    dbms.jvm.additional=-XX:+TrustFinalNonStaticFields
    dbms.jvm.additional=-XX:+DisableExplicitGC
    dbms.jvm.additional=-Djdk.tls.ephemeralDHKeySize=2048
    …=true
    dbms.windows_service_name=neo4j
    dbms.jvm.additional=-Dunsupported.dbms.udc.source=tarball
    dbms.connectors.default_listen_address=0.0.0.0
    dbms.connectors.default_advertised_address=10.186.63.108
    dbms.mode=CORE
    causal_clustering.minimum_core_cluster_size_at_formation=3
    causal_clustering.minimum_core_cluster_size_at_runtime=3
    causal_clustering.initial_discovery_members=10.186.63.108:5000,10.186.63.112:5000,10.186.63.114:5000

Configuration for node02:

    dbms.directories.import=import
    …=false
    …=true
    dbms.connector.bolt.listen_address=0.0.0.0:7687
    …=true
    dbms.connector.http.listen_address=0.0.0.0:7474
    …=true
    dbms.jvm.additional=-XX:+UseG1GC
    dbms.jvm.additional=-XX:-OmitStackTraceInFastThrow
    dbms.jvm.additional=-XX:+AlwaysPreTouch
    dbms.jvm.additional=-XX:+UnlockExperimentalVMOptions
    dbms.jvm.additional=-XX:+TrustFinalNonStaticFields
    dbms.jvm.additional=-XX:+DisableExplicitGC
    dbms.jvm.additional=-Djdk.tls.ephemeralDHKeySize=2048
    …=true
    dbms.windows_service_name=neo4j
    dbms.jvm.additional=-Dunsupported.dbms.udc.source=tarball
    dbms.connectors.default_listen_address=0.0.0.0
    dbms.connectors.default_advertised_address=10.186.63.112
    dbms.mode=CORE
    causal_clustering.minimum_core_cluster_size_at_formation=3
    causal_clustering.minimum_core_cluster_size_at_runtime=3
    causal_clustering.initial_discovery_members=10.186.63.108:5000,10.186.63.112:5000,10.186.63.114:5000

Configuration for node03:

    dbms.directories.import=import
    …=false
    …=true
    dbms.connector.bolt.listen_address=0.0.0.0:7687
    …=true
    dbms.connector.http.listen_address=0.0.0.0:7474
    …=true
    dbms.jvm.additional=-XX:+UseG1GC
    dbms.jvm.additional=-XX:-OmitStackTraceInFastThrow
    dbms.jvm.additional=-XX:+AlwaysPreTouch
    dbms.jvm.additional=-XX:+UnlockExperimentalVMOptions
    dbms.jvm.additional=-XX:+TrustFinalNonStaticFields
    dbms.jvm.additional=-XX:+DisableExplicitGC
    dbms.jvm.additional=-Djdk.tls.ephemeralDHKeySize=2048
    …=true
    dbms.windows_service_name=neo4j
    dbms.jvm.additional=-Dunsupported.dbms.udc.source=tarball
    dbms.connectors.default_listen_address=0.0.0.0
    dbms.connectors.default_advertised_address=10.186.63.114
    dbms.mode=CORE
    causal_clustering.minimum_core_cluster_size_at_formation=3
    causal_clustering.minimum_core_cluster_size_at_runtime=3
    causal_clustering.initial_discovery_members=10.186.63.108:5000,10.186.63.112:5000,10.186.63.114:5000

三个节点启动neo4j

1

|

neo4j start

|

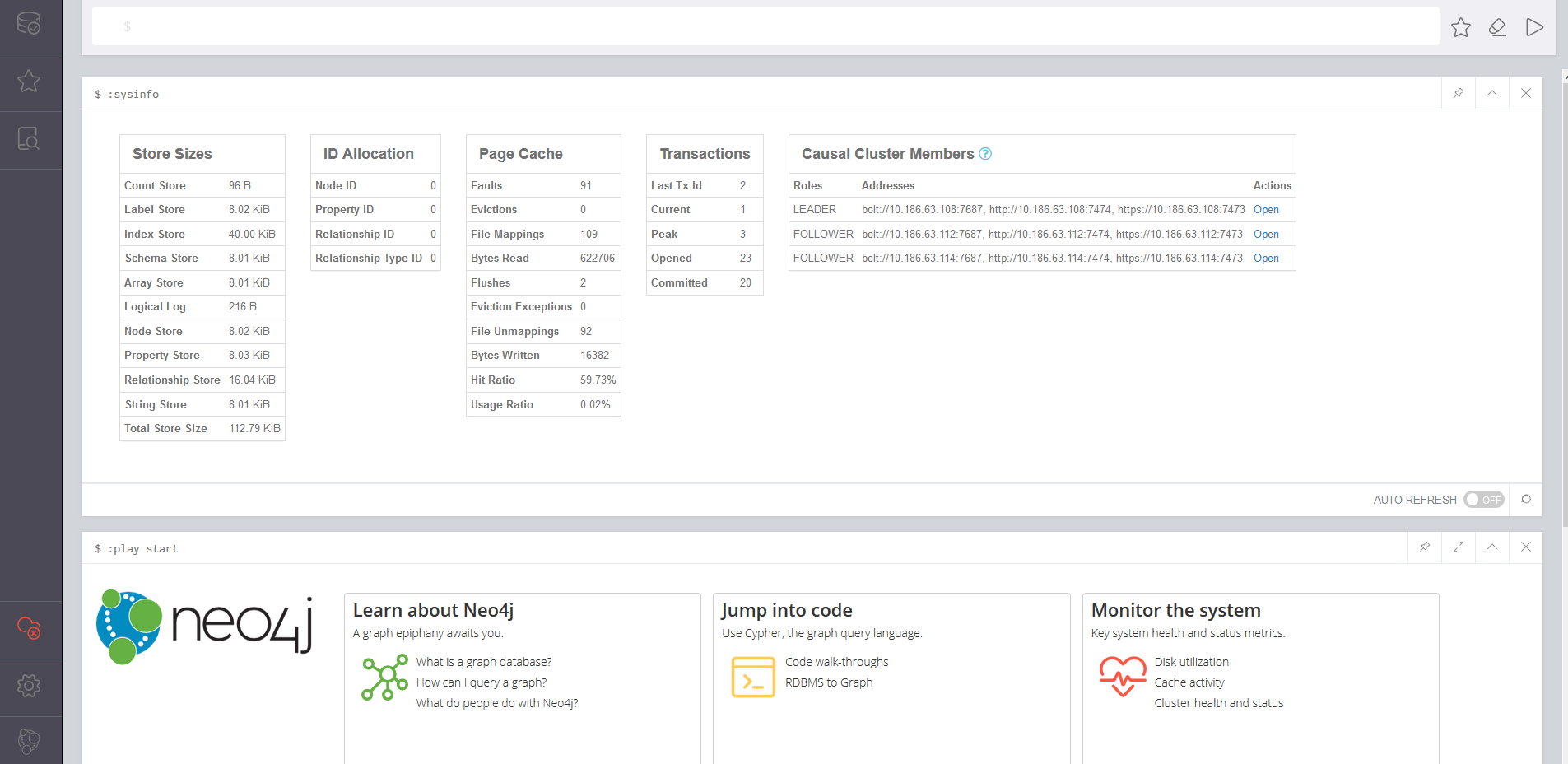

启动完成后可以通过浏览器从任一节点的7474端口查看集群状态

与高可用集群对比,可以看出集群模式并不一样!
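One practical difference is that Core members of a Causal Cluster agree on writes through the Raft protocol, which needs a majority of the Core members to be available. The arithmetic below illustrates what that means for the three Core members configured here; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Majority size and tolerated failures for a Raft group of N core members.
majority()  { echo $(( $1 / 2 + 1 )); }
tolerated() { echo $(( ($1 - 1) / 2 )); }

cores=3   # number of CORE members in this guide
echo "cores=$cores majority=$(majority "$cores") tolerated_failures=$(tolerated "$cores")"
```

With three Core members the cluster keeps accepting writes when one member is down, but not when two are.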

Cluster account access control

Installing and configuring LDAP

Install with yum.

    yum -y install openldap compat-openldap openldap-clients openldap-servers openldap-servers-sql openldap-devel migrationtools

Check the version.

    slapd -VV

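Copy the database configuration file.

    # Copy the default configuration into place and set its ownership. This must be done
    # before starting the service, otherwise generating the password later fails
    cp /usr/share/openldap-servers/DB_CONFIG.example /var/lib/ldap/DB_CONFIG

    # Hand the file to the ldap user, which the yum installation creates automatically
    chown -R ldap:ldap /var/lib/ldap/DB_CONFIG

    # Start the service first; the configuration is changed afterwards
    systemctl start slapd
    systemctl enable slapd

    # Check the status; a normal start means everything is fine
    systemctl status slapd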

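Starting with OpenLDAP 2.4.23, all configuration is stored in the cn=config directory under /etc/openldap/slapd.d, and slapd.conf is no longer used as the configuration file. The configuration files carry the .ldif suffix and are all generated by commands. Open any of them and the first line is a comment stating that the file is auto-generated and must not be edited; changes are made with ldapmodify.

    # AUTO-GENERATED FILE - DO NOT EDIT!! Use ldapmodify.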

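OpenLDAP provides three commands for changing the configuration: ldapadd, ldapmodify and ldapdelete, which add, modify and delete entries respectively. To change or add configuration, first write a file with the .ldif suffix, then use one of these commands to apply it to the configuration under slapd.d. The complete procedure is as follows.

    # Generate the administrator password hash and note it down; it is needed later
    slappasswd -s 123456
    {SSHA}LSgYPTUW4zjGtIVtuZ8cRUqqFRv1tWpE

    # Create an LDIF file that sets the password. The file name is arbitrary, but do not
    # create such files under /etc/openldap/slapd.d/
    # The file is applied with a command to change the live LDAP configuration;
    # here it is created in the home directory
    cd ~
    vim changepwd.ldif
    ----------------------------------------------------------------------
    dn: olcDatabase={0}config,cn=config
    changetype: modify
    add: olcRootPW
    olcRootPW: {SSHA}LSgYPTUW4zjGtIVtuZ8cRUqqFRv1tWpE
    ----------------------------------------------------------------------
    # What the file means:
    # Line 1 names the configuration entry to change, here cn=config/olcDatabase={0}config.
    #   The corresponding file can be found under /etc/openldap/slapd.d/
    # Line 2, changetype, says the operation is a modification
    # Line 3, add, says the olcRootPW attribute is being added
    # Line 4 gives the value of olcRootPW
    # Before running the command below, the olcDatabase={0}config file has no olcRootPW
    # attribute; afterwards it contains olcRootPW with the hashed value from this file
    # Apply the change; -f names the file to read
    ldapadd -Y EXTERNAL -H ldapi:/// -f changepwd.ldif

Never edit the files under /etc/openldap/slapd.d/ directly.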

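Next, some basic schemas must be imported into LDAP. The schema files are located in /etc/openldap/schema/. Schemas control which object classes and attributes an entry may have, so you can import only the ones you need.

    …
    done

Here all of them are imported.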
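Importing every schema can be done with a loop over the .ldif files in the schema directory. The sketch below is one possible way to write such a loop, not necessarily the command used originally: it reuses the `ldapadd -Y EXTERNAL -H ldapi:///` form shown earlier in this guide, the function name `import_schemas` is hypothetical, and by default it only prints the commands (dry run) so it can be reviewed first.

```shell
#!/usr/bin/env bash
# Import every *.ldif schema found in a directory (dry run by default).

# import_schemas DIR [apply] -> print, or with "apply" run, one ldapadd per file.
import_schemas() {
  local dir="$1" mode="${2:-dry-run}" f
  for f in "$dir"/*.ldif; do
    [ -e "$f" ] || continue            # directory missing or no .ldif files
    if [ "$mode" = "apply" ]; then
      ldapadd -Y EXTERNAL -H ldapi:/// -f "$f"
    else
      echo "ldapadd -Y EXTERNAL -H ldapi:/// -f $f"
    fi
  done
}

import_schemas /etc/openldap/schema            # prints the commands only
# import_schemas /etc/openldap/schema apply    # uncomment to import for real
```

A schema that is already loaded is expected to be rejected as a duplicate by slapd, so errors for such files during a full import can usually be read as "already present".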

Change the domain

    # Create changedomain.ldif. The domain used here is actionsky.com and the
    # administrator account is manager.
    # To use another domain, replace dc=actionsky,dc=com in the file with your own
    vim changedomain.ldif
    -------------------------------------------------------------------------
    dn: olcDatabase={1}monitor,cn=config
    changetype: modify
    replace: olcAccess
    … read by * none

    dn: olcDatabase={2}hdb,cn=config
    changetype: modify
    replace: olcSuffix
    olcSuffix: dc=actionsky,dc=com

    dn: olcDatabase={2}hdb,cn=config
    changetype: modify
    replace: olcRootDN
    olcRootDN: cn=manager,dc=actionsky,dc=com

    dn: olcDatabase={2}hdb,cn=config
    changetype: modify
    replace: olcRootPW
    olcRootPW: {SSHA}xlpeqLofS/M7zXLReCrgn+mC9FSel8eL

    dn: olcDatabase={2}hdb,cn=config
    changetype: modify
    add: olcAccess
    … write by anonymous auth by self write by * none
    … read
    … read
    -------------------------------------------------------------------------
    # Apply the change
    ldapmodify -Y EXTERNAL -H ldapi:/// -f changedomain.ldif

Enable the memberof feature

    # Create add-memberof.ldif
    # It enables memberof support so that newly added users get the memberOf attribute
    vim add-memberof.ldif
    -------------------------------------------------------------
    dn: cn=module{0},cn=config
    cn: module{0}
    objectClass: olcModuleList
    objectclass: top
    olcModuleload: memberof.la
    olcModulePath: /usr/lib64/openldap

    dn: olcOverlay={0}memberof,olcDatabase={2}hdb,cn=config
    objectClass: olcConfig
    objectClass: olcMemberOf
    objectClass: olcOverlayConfig
    objectClass: top
    olcOverlay: memberof
    olcMemberOfDangling: ignore
    olcMemberOfRefInt: TRUE
    olcMemberOfGroupOC: groupOfUniqueNames
    olcMemberOfMemberAD: uniqueMember
    olcMemberOfMemberOfAD: memberOf
    -------------------------------------------------------------

    # Create refint1.ldif
    vim refint1.ldif
    -------------------------------------------------------------
    dn: cn=module{0},cn=config
    add: olcmoduleload
    olcmoduleload: refint
    -------------------------------------------------------------

    # Create refint2.ldif
    vim refint2.ldif
    -------------------------------------------------------------
    dn: olcOverlay=refint,olcDatabase={2}hdb,cn=config
    objectClass: olcConfig
    objectClass: olcOverlayConfig
    objectClass: olcRefintConfig
    objectClass: top
    olcOverlay: refint
    olcRefintAttribute: memberof uniqueMember manager owner
    -------------------------------------------------------------

    # Run the following commands in this exact order to load the configuration
    ldapadd -Q -Y EXTERNAL -H ldapi:/// -f add-memberof.ldif
    ldapmodify -Q -Y EXTERNAL -H ldapi:/// -f refint1.ldif
    ldapadd -Q -Y EXTERNAL -H ldapi:/// -f refint2.ldif

The configuration changes are now complete. On this basis, create an organization called actionsky Company and, under it, an organizational role named manager (users in this role may administer the entire LDAP directory).

    # Create the file
    vim base.ldif
    ----------------------------------------------------------
    dn: dc=actionsky,dc=com
    objectClass: top
    objectClass: dcObject
    objectClass: organization
    o: actionsky Company
    dc: actionsky

    dn: cn=manager,dc=actionsky,dc=com
    objectClass: organizationalRole
    cn: manager
    ----------------------------------------------------------
    # Load the entries. Adjust the domain to the one you configured, and enter the
    # password generated above when prompted
    ldapadd -x -D cn=manager,dc=actionsky,dc=com -W -f base.ldif

Neo4j access control

In cluster mode, Neo4j access control is role-based. Neo4j provides the following built-in roles:

reader

- Read-only access to the data graph (all nodes, relationships, properties).

editor

- Read/write access to the data graph.

- Write access limited to creating and changing existing properties key, node labels, and relationship types of the graph.

publisher

- Read/write access to the data graph.

architect

- Read/write access to the data graph.

- Set/delete access to indexes along with any other future schema constructs.

admin

- Read/write access to the data graph.

- Set/delete access to indexes along with any other future schema constructs.

- View/terminate queries.

Overview of the built-in roles:

| Action | reader | editor | publisher | architect | admin | (no role) | Available in Community Edition |
|---|---|---|---|---|---|---|---|
| Change own password | √ | √ | √ | √ | √ | √ | √ |
| View own details | √ | √ | √ | √ | √ | √ | √ |
| Read data | √ | √ | √ | √ | √ | | √ |
| View own queries | √ | √ | √ | √ | √ | | |
| Terminate own queries | √ | √ | √ | √ | √ | | |
| Write/update/delete data | | √ | √ | √ | √ | | √ |
| Create new types of properties key | | | √ | √ | √ | | √ |
| Create new types of nodes labels | | | √ | √ | √ | | √ |
| Create new types of relationship types | | | √ | √ | √ | | √ |
| Create/drop index/constraint | | | | √ | √ | | √ |
| Create/delete user | | | | | √ | | √ |
| Change another user's password | | | | | √ | | |
| Assign/remove role to/from user | | | | | √ | | |
| Suspend/activate user | | | | | √ | | |
| View all users | | | | | √ | | √ |
| View all roles | | | | | √ | | |
| View all roles for a user | | | | | √ | | |
| View all users for a role | | | | | √ | | |
| View all queries | | | | | √ | | |
| Terminate all queries | | | | | √ | | |
| Dynamically change configuration (see Dynamic settings) | | | | | √ | | |

Next, continue with LDAP and create users that correspond to the Neo4j roles.

    # Create the file that adds the users
    vim user.ldif
    -----------------------------------------------------------------------------------------------
    dn: ou=users,dc=actionsky,dc=com
    objectClass: organizationalUnit
    objectClass: top
    ou: users

    dn: ou=groups,dc=actionsky,dc=com
    objectClass: organizationalUnit
    objectClass: top
    ou: groups

    dn: cn=reader,ou=groups,dc=actionsky,dc=com
    objectClass: groupOfNames
    objectClass: top
    cn: reader
    member:uid=reader,ou=users,dc=actionsky,dc=com

    dn: cn=editor,ou=groups,dc=actionsky,dc=com
    objectClass: groupOfNames
    objectClass: top
    cn: editor
    member:uid=editor,ou=users,dc=actionsky,dc=com

    dn: cn=publisher,ou=groups,dc=actionsky,dc=com
    objectClass: groupOfNames
    objectClass: top
    cn: publisher
    member:uid=publisher,ou=users,dc=actionsky,dc=com

    dn: cn=architect,ou=groups,dc=actionsky,dc=com
    objectClass: groupOfNames
    objectClass: top
    cn: architect
    member:uid=architect,ou=users,dc=actionsky,dc=com

    dn: cn=admin,ou=groups,dc=actionsky,dc=com
    objectClass: groupOfNames
    objectClass: top
    cn: admin
    member:uid=admin,ou=users,dc=actionsky,dc=com

    dn: uid=reader,ou=users,dc=actionsky,dc=com
    objectClass: organizationalPerson
    objectClass: person
    objectClass: extensibleObject
    objectClass: uidObject
    objectClass: inetOrgPerson
    objectClass: top
    cn: Reader User
    givenName: Reader
    sn: reader
    uid: reader
    mail: reader@actionsky.com
    ou: users
    … test
    memberOf: cn=reader,ou=groups,dc=actionsky,dc=com

    dn: uid=editor,ou=users,dc=actionsky,dc=com
    objectClass: organizationalPerson
    objectClass: person
    objectClass: extensibleObject
    objectClass: uidObject
    objectClass: inetOrgPerson
    objectClass: top
    cn: Editor User
    givenName: Editor
    sn: editor
    uid: editor
    mail: editor@actionsky.com
    ou: users
    … test
    memberOf: cn=editor,ou=groups,dc=actionsky,dc=com

    dn: uid=publisher,ou=users,dc=actionsky,dc=com
    objectClass: organizationalPerson
    objectClass: person
    objectClass: extensibleObject
    objectClass: uidObject
    objectClass: inetOrgPerson
    objectClass: top
    cn: Publisher User
    givenName: Publisher
    sn: publisher
    uid: publisher
    mail: publisher@actionsky.com
    ou: users
    … test
    memberOf: cn=publisher,ou=groups,dc=actionsky,dc=com

    dn: uid=architect,ou=users,dc=actionsky,dc=com
    objectClass: organizationalPerson
    objectClass: person
    objectClass: extensibleObject
    objectClass: uidObject
    objectClass: inetOrgPerson
    objectClass: top
    cn: Architect User
    givenName: Architect
    sn: architect
    uid: architect
    mail: architect@actionsky.com
    ou: users
    … test
    memberOf: cn=architect,ou=groups,dc=actionsky,dc=com

    dn: uid=admin,ou=users,dc=actionsky,dc=com
    objectClass: organizationalPerson
    objectClass: person
    objectClass: extensibleObject
    objectClass: uidObject
    objectClass: inetOrgPerson
    objectClass: top
    cn: Admin User
    givenName: Admin
    sn: admin
    uid: admin
    mail: admin@actionsky.com
    ou: users
    … test
    memberOf: cn=admin,ou=groups,dc=actionsky,dc=com
    -----------------------------------------------------------------------------------------------
    # Import into LDAP
    ldapadd -x -D cn=manager,dc=actionsky,dc=com -W -f user.ldif

    # After editing the file, import it again with ldapmodify and the -a option:
    ldapmodify -a -x -D cn=manager,dc=actionsky,dc=com -W -f user.ldif
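The group and user entries in user.ldif follow one pattern per role, so they can also be generated instead of typed by hand. The sketch below prints one group entry and one user entry for each of the five roles; the helper names are hypothetical, the two `ou` container entries are not generated, and the password attribute is intentionally left out, so it has to be added separately.

```shell
#!/usr/bin/env bash
# Generate the repetitive group/user LDIF entries, one pair per Neo4j role.
base="dc=actionsky,dc=com"
roles="reader editor publisher architect admin"

# capitalize WORD -> Word (portable, no bash 4 features needed)
capitalize() {
  printf '%s%s' "$(printf '%s' "$1" | cut -c1 | tr '[:lower:]' '[:upper:]')" \
                "$(printf '%s' "$1" | cut -c2-)"
}

group_entry() {
  cat <<EOF
dn: cn=$1,ou=groups,$base
objectClass: groupOfNames
objectClass: top
cn: $1
member: uid=$1,ou=users,$base

EOF
}

user_entry() {
  local name
  name="$(capitalize "$1")"
  cat <<EOF
dn: uid=$1,ou=users,$base
objectClass: organizationalPerson
objectClass: person
objectClass: extensibleObject
objectClass: uidObject
objectClass: inetOrgPerson
objectClass: top
cn: $name User
givenName: $name
sn: $1
uid: $1
mail: $1@actionsky.com
ou: users
memberOf: cn=$1,ou=groups,$base

EOF
}

for r in $roles; do group_entry "$r"; done
for r in $roles; do user_entry "$r"; done
```

Redirecting the output to a file gives a starting point that can be reviewed and then loaded with the same ldapadd command as above.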

Next, continue with Neo4j and add the following configuration on every node in the cluster.

    …=true
    dbms.security.auth_provider=ldap
    # Address and port of the LDAP server
    dbms.security.ldap.host=ldap://10.186.61.39:389
    dbms.security.ldap.authentication.mechanism=simple
    dbms.security.ldap.authentication.user_dn_template=uid={0},ou=users,dc=actionsky,dc=com
    …=false
    …=true
    # Account and password used to connect to the LDAP server
    dbms.security.ldap.authorization.system_username=cn=manager,dc=actionsky,dc=com
    dbms.security.ldap.authorization.system_password=123456
    dbms.security.ldap.authorization.user_search_base=ou=users,dc=actionsky,dc=com
    dbms.security.ldap.authorization.user_search_filter=(&(objectClass=*)(uid={0}))
    dbms.security.ldap.authorization.group_membership_attributes=memberOf
    dbms.security.ldap.authorization.group_to_role_mapping=\
      … = reader; \
      … = editor; \
      … = publisher; \
      … = architect; \
      … = admin
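Each entry of `group_to_role_mapping` pairs the DN of an LDAP group with a Neo4j role. The sketch below prints a mapping under the assumption that the intended groups are the ones created in user.ldif (`cn=<role>,ou=groups,dc=actionsky,dc=com`); that pairing is an assumption made for illustration, and `print_mapping` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Print a group_to_role_mapping setting from the groups defined in user.ldif.
base="dc=actionsky,dc=com"
roles="reader editor publisher architect admin"

print_mapping() {
  local sep
  printf '%s\n' 'dbms.security.ldap.authorization.group_to_role_mapping=\'
  set -- $roles
  while [ $# -gt 0 ]; do
    # every entry except the last ends with "; \" to continue the line
    if [ $# -gt 1 ]; then sep='; \'; else sep=''; fi
    printf '  "cn=%s,ou=groups,%s" = %s%s\n' "$1" "$base" "$1" "$sep"
    shift
  done
}

print_mapping
```

The quotes around each DN keep the commas inside the DN from being read as separators.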

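Restart the Neo4j cluster.

Log in to Neo4j Browser and enter :server connect to connect to the database. The Database Information panel shows the Username and Roles of the connection, which can then be used to verify the role permissions.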

Installing and configuring HAProxy

Install on node01 and node02.

Install build tools and utilities.

    yum install gcc gcc-c++ glibc glibc-devel pcre pcre-devel openssl openssl-devel systemd-devel net-tools vim iotop bc zip unzip zlib-devel lrzsz tree screen lsof tcpdump wget ntpdate

Building from source

Download

    wget https://www.haproxy.org/download/2.0/src/haproxy-2.0.4.tar.gz
    tar -zxvf haproxy-2.0.4.tar.gz
    cd haproxy-2.0.4

Read the build instructions.

    # less INSTALL
    To build haproxy, you have to choose your target OS amongst the following ones
    and assign it to the TARGET variable :

      - …        for Linux kernel 2.6.28 and above
      - …        for Solaris 8 or 10 (others untested)
      - …        for FreeBSD 5 to 12 (others untested)
      - …        for NetBSD
      - …        for Mac OS/X
      - …        for OpenBSD 5.7 and above
      - …        for AIX 5.1
      - …        for AIX 5.2
      - …        for Cygwin
      - …        for Haiku
      - …        for any other OS or version.
      - custom   to manually adjust every setting

    You may also choose your CPU to benefit from some optimizations. This is
    particularly important on UltraSparc machines. For this, you can assign
    one of the following choices to the CPU variable :

      - …          for intel PentiumPro, Pentium 2 and above, AMD Athlon (32 bits)
      - …          for intel Pentium, AMD K6, VIA C3.
      - ultrasparc : Sun UltraSparc I/II/III/IV processor
      - …'s specific processor optimizations. Use with extreme care,
        and never in virtualized environments (known to break).
      - generic    : any other processor or no CPU-specific optimization. (default)

Build and install.

    make TARGET=linux-glibc ARCH=generic PREFIX=/data/haproxy
    make install PREFIX=/data/haproxy
    ln -s /data/haproxy/sbin/haproxy /usr/sbin/haproxy
    which haproxy

Installing from RPM

    rpm -ivh haproxy-2.0.4-1.el7.x86_64.rpm

After installation, check the version.

    # haproxy -v
    HA-Proxy version 2.0.4 2019/08/06 - https://haproxy.org/

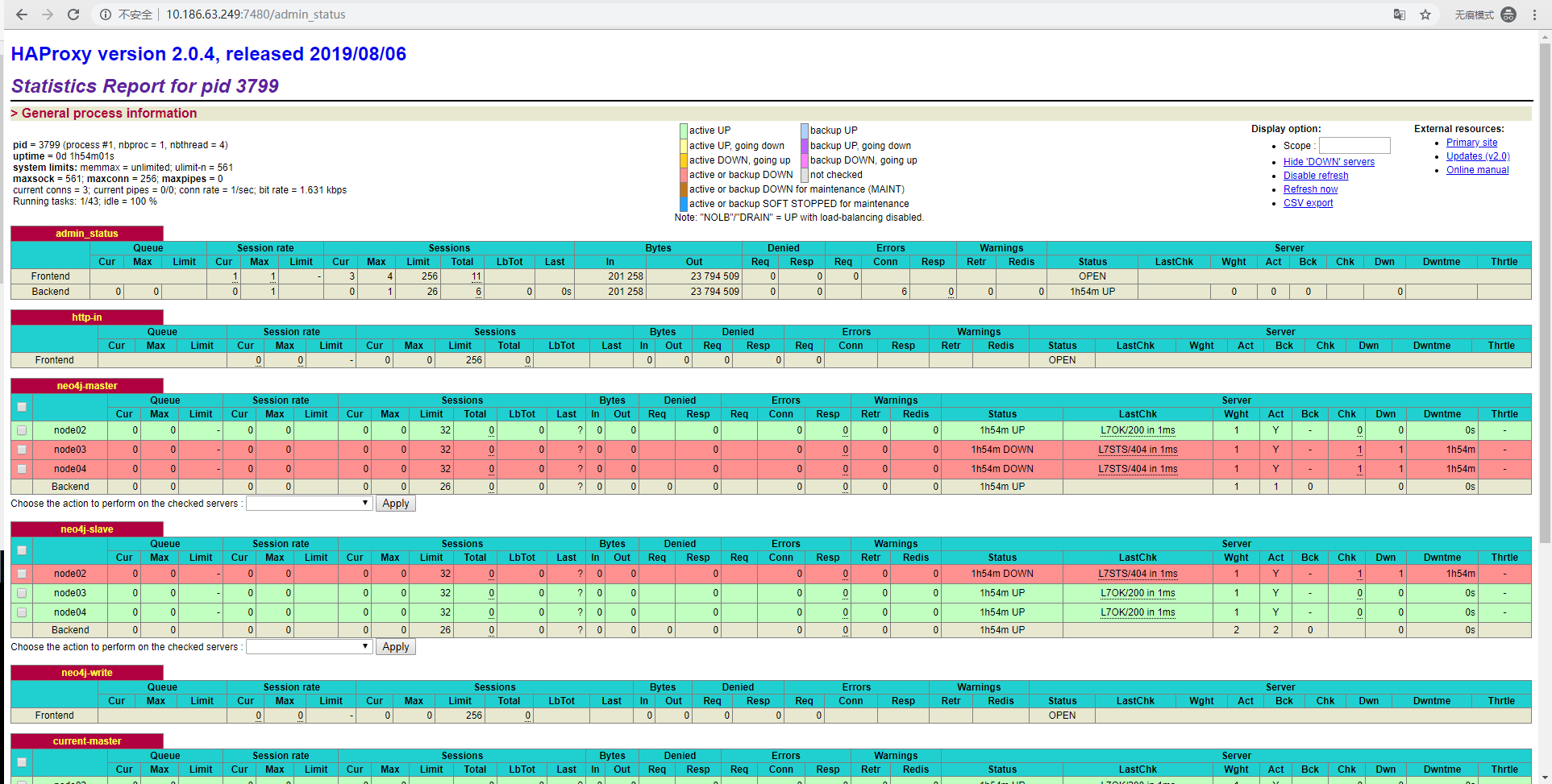

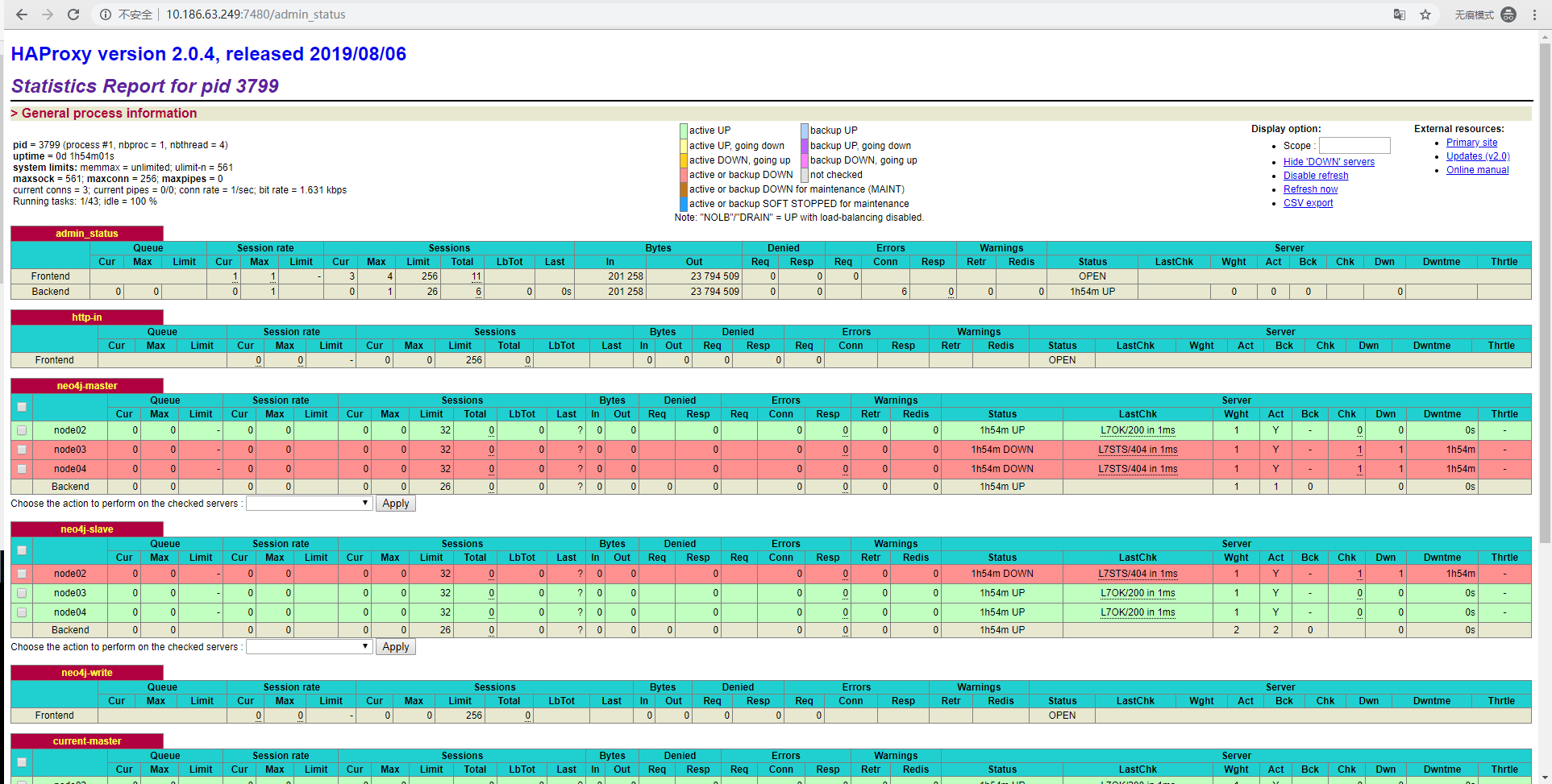

HAProxy configuration

Edit the configuration file with vim /data/haproxy/conf/haproxy.cfg. Taking node01 as an example, the content is as follows.

    global
        daemon
        maxconn 256
        stats socket /var/run/haproxy.sock mode 600 level admin
        stats timeout 2m
        log 127.0.0.1 local0
        #log 127.0.0.1 local0 info
        #log 127.0.0.1 local1 warning

    defaults
        #mode http
        #option httplog
        timeout connect 5000ms
        timeout client 50000ms
        timeout server 50000ms
        log global

    #listen admin                         # status page for HAProxy, named admin_stats
        #mode http                        # layer-7 HTTP mode
        #bind 10.186.63.108:7480          # listening port
        #stats enable                     # enable the statistics page
        #…                                # inherit the log definition from global
        #…                                # URL path of the page, i.e. http://ip/admin_stats
        #stats realm Haproxy\ Statistics  # prompt shown in the password dialog
        #…                                # user and password (admin); several users may be set
        #stats hide-version               # hide the HAProxy version on the statistics page
        #…                                # management is allowed only after authentication
        #…                                # page auto-refresh interval, 30s

    frontend http-in
        mode http
        bind *:17474
        acl write_method method POST DELETE PUT
        acl write_hdr hdr_val(X-Write) eq 1
        acl write_payload payload(0,0) -m reg -i CREATE|MERGE|SET|DELETE|REMOVE
        acl tx_cypher_endpoint path_beg /db/data/transaction
        … if tx_cypher_endpoint
        … if write_hdr
        … if tx_cypher_endpoint write_payload
        … if tx_cypher_endpoint
        … if write_method
        default_backend neo4j-slave
        log global

    backend neo4j-master
        mode http
        #option httpchk GET /db/manage/server/ha/master HTTP/1.0\r\nAuthorization:\ Basic\ bmVvNGo6MTIz
        option httpchk GET /db/manage/server/ha/master HTTP/1.0
        server node01 10.186.63.108:7474 maxconn 32 check
        server node02 10.186.63.112:7474 maxconn 32 check
        server node03 10.186.63.114:7474 maxconn 32 check

    backend neo4j-slave
        mode http
        #option httpchk GET /db/manage/server/ha/available HTTP/1.0\r\nAuthorization:\ Basic\ bmVvNGo6MTIz
        option httpchk GET /db/manage/server/ha/slave HTTP/1.0
        #balance uri
        acl tx_cypher_endpoint var(txn.tx_cypher_endpoint),bool
        # … slightly higher with org.neo4j.server.transaction.timeout
        … if tx_cypher_endpoint
        … if tx_cypher_endpoint
        server node01 10.186.63.108:7474 maxconn 32 check
        server node02 10.186.63.112:7474 maxconn 32 check
        server node03 10.186.63.114:7474 maxconn 32 check

    # Configuring HAProxy for the Bolt Protocol
    frontend neo4j-write
        mode tcp
        bind *:7680
        default_backend current-master

    backend current-master
        option httpchk HEAD /db/manage/server/ha/master HTTP/1.0
        server node01 10.186.63.108:7687 check port 7474
        server node02 10.186.63.112:7687 check port 7474
        server node03 10.186.63.114:7687 check port 7474

    frontend neo4j-read
        mode tcp
        bind *:7681
        default_backend current-slaves

    backend current-slaves
        balance roundrobin
        option httpchk HEAD /db/manage/server/ha/slave HTTP/1.0
        server node01 10.186.63.108:7687 check port 7474
        server node02 10.186.63.112:7687 check port 7474
        server node03 10.186.63.114:7687 check port 7474

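A note on the ports: because of limited resources HAProxy runs on the same hosts as Neo4j, where ports 7474 and 7687 are already in use by Neo4j, so the HAProxy frontends bind to different ports (17474, 7680 and 7681).

Start HAProxy.

    haproxy -f /data/haproxy/conf/haproxy.cfg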
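Before starting the daemon it can help to let HAProxy parse the file first; `haproxy -c -f <file>` runs in check mode and exits without serving traffic. The wrapper below is a small sketch around that command; the function name `check_cfg` and its return codes 2 and 3 are choices made for this example.

```shell
#!/usr/bin/env bash
# Validate an HAProxy configuration file before starting the daemon.

# check_cfg FILE -> 0 if HAProxy accepts the file, 2 if the file is missing,
# 3 if the haproxy binary is not installed, otherwise HAProxy's own exit code.
check_cfg() {
  local cfg="$1"
  if [ ! -f "$cfg" ]; then
    echo "config file not found: $cfg" >&2
    return 2
  fi
  if ! command -v haproxy >/dev/null 2>&1; then
    echo "haproxy not found in PATH" >&2
    return 3
  fi
  haproxy -c -f "$cfg"      # -c: check mode, parse the file and exit
}

if check_cfg "${1:-/data/haproxy/conf/haproxy.cfg}"; then
  echo "configuration accepted"
else
  echo "configuration check did not pass"
fi
```

Running the check after every edit avoids restarting HAProxy with a file it cannot parse.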

HAProxy log configuration

Custom log location

In the HAProxy configuration above, all logs are sent to local0, one of the syslog facilities reserved for local use and handled by rsyslog on CentOS 7.

Configure rsyslog by editing /etc/rsyslog.conf.

Uncomment the following lines.

    # Provides UDP syslog reception
    $ModLoad imudp
    $UDPServerRun 514

    # Provides TCP syslog reception
    $ModLoad imtcp
    $InputTCPServerRun 514

Add the following.

    # Save haproxy log
    local0.* /data/haproxy/logs/haproxy.log

To keep HAProxy error messages from being written to the terminal, comment out this line:

    *.emerg :omusrmsg:*

Add:

    …log/messages

Restart rsyslog.

    systemctl restart rsyslog

The log entries can now be seen in /data/haproxy/logs/haproxy.log.

Custom log format

The default HAProxy log is hard to read and does not capture all the information we want to see, so the content to be logged has to be defined explicitly.

Edit the configuration file with vim /data/haproxy/conf/haproxy.cfg and add the following to the frontend http-in section:

    capture request header Host len 64
    capture request header User-Agent len 128
    capture request header X-Forwarded-For len 100
    capture request header Referer len 200
    capture response header Server len 40
    capture response header Server-ID len 40
    # capture records the listed headers
    log-format %ci:%cp\ %si:%sp\ %B\ %U\ %ST\ %r\ %f\ %b\ %bi\ %hrl\ %hsl\
    # log-format defines the content (variables) written to the log
    # capture collects the information, log-format selects the variables to print

For requests in tcp mode, the log configuration is as follows:

    capture request header Host len 64
    capture request header User-Agent len 128
    capture request header X-Forwarded-For len 100
    capture request header Referer len 200
    capture response header Server len 40
    capture response header Server-ID len 40
    # capture records the listed headers
    log-format %ci:%cp\ %si:%sp\ %B\ %U\ %ST\ %f\ %b\ %bi\ %hrl\ %hsl\
    # log-format defines the content (variables) written to the log
    # capture collects the information, log-format selects the variables to print

Custom log format reference from the official documentation

| R | var | field name (8.2.2 and 8.2.3 for description) | type |
|---|---|---|---|
| | %o | special variable, apply flags on all next var | |
| | %B | bytes_read (from server to client) | numeric |
| H | %CC | captured_request_cookie | string |
| H | %CS | captured_response_cookie | string |
| | %H | hostname | string |
| H | %HM | HTTP method (ex: POST) | string |
| H | %HP | HTTP request URI without query string (path) | string |
| H | %HQ | HTTP request URI query string (ex: ?bar=baz) | string |
| H | %HU | HTTP request URI (ex: /foo?bar=baz) | string |
| H | %HV | HTTP version (ex: HTTP/1.0) | string |
| | %ID | unique-id | string |
| | %ST | status_code | numeric |
| | %T | gmt_date_time | date |
| | %Ta | Active time of the request (from TR to end) | numeric |
| | %Tc | Tc | numeric |
| | %Td | Td = Tt - (Tq + Tw + Tc + Tr) | numeric |
| | %Tl | local_date_time | date |
| | %Th | connection handshake time (SSL, PROXY proto) | numeric |
| H | %Ti | idle time before the HTTP request | numeric |
| H | %Tq | Th + Ti + TR | numeric |
| H | %TR | time to receive the full request from 1st byte | numeric |
| H | %Tr | Tr (response time) | numeric |
| | %Ts | timestamp | numeric |
| | %Tt | Tt | numeric |
| | %Tw | Tw | numeric |
| | %U | bytes_uploaded (from client to server) | numeric |
| | %ac | actconn | numeric |
| | %b | backend_name | string |
| | %bc | beconn (backend concurrent connections) | numeric |
| | %bi | backend_source_ip (connecting address) | IP |
| | %bp | backend_source_port (connecting address) | numeric |
| | %bq | backend_queue | numeric |
| | %ci | client_ip (accepted address) | IP |
| | %cp | client_port (accepted address) | numeric |
| | %f | frontend_name | string |
| | %fc | feconn (frontend concurrent connections) | numeric |
| | %fi | frontend_ip (accepting address) | IP |
| | %fp | frontend_port (accepting address) | numeric |
| | %ft | frontend_name_transport ('~' suffix for SSL) | string |
| | %lc | frontend_log_counter | numeric |
| | %hr | captured_request_headers default style | string |
| | %hrl | captured_request_headers CLF style | string list |
| | %hs | captured_response_headers default style | string |
| | %hsl | captured_response_headers CLF style | string list |
| | %ms | accept date milliseconds (left-padded with 0) | numeric |
| | %pid | PID | numeric |
| H | %r | http_request | string |
| | %rc | retries | numeric |
| | %rt | request_counter (HTTP req or TCP session) | numeric |
| | %s | server_name | string |
| | %sc | srv_conn (server concurrent connections) | numeric |
| | %si | server_IP (target address) | IP |
| | %sp | server_port (target address) | numeric |
| | %sq | srv_queue | numeric |
| S | %sslc | ssl_ciphers (ex: AES-SHA) | string |
| S | %sslv | ssl_version (ex: TLSv1) | string |
| | %t | date_time (with millisecond resolution) | date |
| H | %tr | date_time of HTTP request | date |
| H | %trg | gmt_date_time of start of HTTP request | date |
| H | %trl | local_date_time of start of HTTP request | date |
| | %ts | termination_state | string |
| H | %tsc | termination_state with cookie status | string |

R = Restrictions ; H = mode http only ; S = SSL only

After restarting HAProxy, run an access test. One log entry looks like this:

    Sep 11 10:30:49 localhost haproxy[8142]: 192.168.3.9:9406 10.186.63.114:7474 7435 398 200 GET /browser/assets/neo4j-world-16a20d139c28611513ec675e56d16d41.png HTTP/1.1 http-in neo4j-slave 10.186.63.108 10.186.63.249:17474 Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:69.0) Gecko/20100101 Firefox/69.0 - http://10.186.63.249:17474/browser/ - -\

The log is now considerably richer and records the information we need.
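Because the HTTP log-format above is space-separated, the leading fields of an entry can be pulled apart with plain shell word splitting. The sketch below does this for a shortened copy of the sample entry (the header captures at the end are left out because they contain spaces themselves); the variable names are chosen for this example.

```shell
#!/usr/bin/env bash
# Split the fixed leading fields of one HAProxy log entry written with
# log-format "%ci:%cp %si:%sp %B %U %ST %r %f %b %bi ...".
line='Sep 11 10:30:49 localhost haproxy[8142]: 192.168.3.9:9406 10.186.63.114:7474 7435 398 200 GET /browser/assets/neo4j-world-16a20d139c28611513ec675e56d16d41.png HTTP/1.1 http-in neo4j-slave 10.186.63.108'

payload="${line#*haproxy\[*\]: }"   # drop the syslog prefix up to "haproxy[pid]: "

set -f                              # no filename expansion while splitting
set -- $payload
set +f

client="$1"; server="$2"; bytes_read="$3"; bytes_uploaded="$4"; status="$5"
method="$6"; uri="$7"; http_version="$8"      # %r expands to three words
frontend="$9"; backend="${10}"; backend_source_ip="${11}"

echo "client=$client server=$server status=$status"
echo "request=$method $uri ($http_version)"
echo "frontend=$frontend backend=$backend via $backend_source_ip"
```

For this entry the request entered through the http-in frontend and was routed to the neo4j-slave backend, which is the expected path for a read-only GET.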

Installing and configuring Keepalived

The VIP requested for this setup is 10.186.63.249.

Install on node01 and node02.

Install the build tools and libraries.

    yum install gcc openssl-devel popt-devel libnl libnl-devel libnfnetlink-devel libmnl-devel ipset-devel net-snmp-devel -y

Building from source

Download

    wget https://www.keepalived.org/software/keepalived-2.0.18.tar.gz
    tar -zxvf keepalived-2.0.18.tar.gz
    cd keepalived-2.0.18

开始编译安装

1 2 3 |

./configure --prefix=/data/keepalived make make install |

Create the configuration directory

    mkdir /etc/keepalived

Copy the configuration files and create a symlink

    cp /data/keepalived/etc/keepalived/keepalived.conf /etc/keepalived
    cp /data/backup/keepalived-2.0.18/keepalived/etc/init.d/keepalived /etc/init.d
    cp /data/keepalived/etc/sysconfig/keepalived /etc/sysconfig
    ln -s /data/keepalived/sbin/keepalived /usr/sbin

RPM installation

    rpm -ivh keepalived-2.0.18-1.el7.x86_64.rpm

After installation, check the version

    # keepalived -v
    Keepalived v2.0.18 (07/26,2019)
    Copyright(C) 2001-2019 Alexandre Cassen, <acassen@gmail.com>
    for Linux 3.10.0
    #1 SMP Tue Jun 18 16:35:19 UTC 2019
    configure options: --prefix=/data/keepalived
    Config options: LVS VRRP VRRP_AUTH OLD_CHKSUM_COMPAT FIB_ROUTING
    System options: PIPE2 SIGNALFD INOTIFY_INIT1 VSYSLOG EPOLL_CREATE1 IPV6_ADVANCED_API LIBNL1 RTA_ENCAP RTA_EXPIRES RTA_PREF FRA_TUN_ID RTAX_CC_ALGO RTAX_QUICKACK FRA_OIFNAME IFA_FLAGS IP_MULTICAST_ALL NET_LINUX_IF_H_COLLISION LIBIPTC_LINUX_NET_IF_H_COLLISION LIBIPVS_NETLINK VRRP_VMAC IFLA_LINK_NETNSID CN_PROC SOCK_NONBLOCK SOCK_CLOEXEC O_PATH GLOB_BRACE INET6_ADDR_GEN_MODE SO_MARK SCHED_RT SCHED_RESET_ON_FORK

Keepalived configuration

Edit the configuration file with vim /etc/keepalived/keepalived.conf. The content on node01 is as follows

    ! Configuration File for keepalived

    vrrp_script check_haproxy {
        script "/etc/keepalived/check_haproxy.sh"
        interval 2
        weight 2
    }

    global_defs {
        script_user root
        enable_script_security
        notification_email {
            acassen@firewall.loc
        }
        router_id keepalived_VIP
    }
    vrrp_instance VI_1 {
        state MASTER
        interface eth0
        virtual_router_id 100
        nopreempt
        priority 150
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 123456
        }
        virtual_ipaddress {
            10.186.63.249
        }
        track_script {
            check_haproxy
        }
    }

The content on node02 is as follows

    ! Configuration File for keepalived

    vrrp_script check_haproxy {
        script "/etc/keepalived/check_haproxy.sh"
        interval 2
        weight 2
    }

    global_defs {
        script_user root
        enable_script_security
        notification_email {
            acassen@firewall.loc
        }
        router_id keepalived_VIP
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface eth0
        virtual_router_id 100
        nopreempt
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 123456
        }
        virtual_ipaddress {
            10.186.63.249
        }
        track_script {
            check_haproxy
        }
    }

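Notes on the configuration:

- node01 is the primary, with state MASTER; node02 is the standby, with state BACKUP

- router_id is the same on both nodes

- virtual_router_id is the same on both nodes

- interface is the name of the network interface the VIP is bound to

- priority on node01 is higher than on node02, i.e. the MASTER value is greater than the BACKUP value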
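The rules in this list can be checked mechanically before starting the service. The following is a minimal sketch under stated assumptions: the helper names `conf_value` and `check_vrrp_pair` are hypothetical, each keyword is assumed to appear once per file as a `keyword value` line, and only the three rules listed above (router_id, virtual_router_id, priority) are checked.

```shell
#!/bin/bash
# conf_value FILE KEY: print the value of the first "KEY value" line in FILE.
conf_value() {
    awk -v key="$2" '$1 == key { print $2; exit }' "$1"
}

# check_vrrp_pair MASTER_CONF BACKUP_CONF: report violations of the rules
# above; exit status 0 only when all of them hold.
check_vrrp_pair() {
    local master="$1" backup="$2" key mp bp rc=0
    for key in router_id virtual_router_id; do
        if [ "$(conf_value "$master" "$key")" != "$(conf_value "$backup" "$key")" ]; then
            echo "mismatch: $key"
            rc=1
        fi
    done
    mp=$(conf_value "$master" priority)
    bp=$(conf_value "$backup" priority)
    # A missing priority is treated as 0 so the comparison stays numeric.
    if [ "${mp:-0}" -le "${bp:-0}" ]; then
        echo "priority: MASTER must be higher than BACKUP"
        rc=1
    fi
    return "$rc"
}
```

With both files copied to one machine, a call such as `check_vrrp_pair node01.conf node02.conf` (hypothetical file names) prints nothing and returns 0 when the pair is consistent.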

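In the /etc/keepalived directory, create a script that checks haproxy, with the following content (identical on node01 and node02)

    # cat check_haproxy.sh
    #!/bin/bash
    # Count the running haproxy processes
    h=`ps -C haproxy --no-header |wc -l`
    # If haproxy is no longer running, stop keepalived so the VIP is released
    if [ $h -eq 0 ]; then
        systemctl stop keepalived
    fi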

Make the script executable

    chmod a+x check_haproxy.sh
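Before wiring the script into keepalived, it can be useful to see what it would decide without actually stopping the service. The following dry-run sketch is an illustration only: the function names `count_procs`, `service_alive` and `on_check` are hypothetical, and the process count uses the same `ps -C` call as check_haproxy.sh.

```shell
#!/bin/bash
# count_procs NAME: number of running processes called NAME
# (same ps invocation as check_haproxy.sh).
count_procs() {
    ps -C "$1" --no-header | wc -l
}

# service_alive NAME: exit status 0 when at least one NAME process is running.
service_alive() {
    [ "$(count_procs "$1")" -gt 0 ]
}

# on_check NAME: print the action the check would take, without performing it.
on_check() {
    if service_alive "$1"; then
        echo "ok"
    else
        echo "stop keepalived"
    fi
}
```

Running `on_check haproxy` on each node shows whether the check would leave keepalived alone or stop it in the current state.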

Operations on node01

Start keepalived

    systemctl start keepalived

Check the status

    # systemctl status keepalived
    ● keepalived.service - LVS and VRRP High Availability Monitor
       Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)
       Active: active (running) since Tue 2019-08-20 14:04:02 CST; 24min ago
    $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)
     Main PID: 3813 (keepalived)
        Tasks: 2
       Memory: 744.0K
       CGroup: /system.slice/keepalived.service
               ├─3813 /data/keepalived/sbin/keepalived -D
               └─3814 /data/keepalived/sbin/keepalived -D

    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249

Check the interface addresses (only eth0 is shown)

    # ip addr
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 02:00:0a:ba:3f:6c brd ff:ff:ff:ff:ff:ff
        inet 10.186.63.108/24 brd 10.186.63.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet 10.186.63.249/32 scope global eth0
           valid_lft forever preferred_lft forever
        inet6 fe80::aff:feba:3f6c/64 scope link
           valid_lft forever preferred_lft forever

Operations on node02

Start keepalived

    systemctl start keepalived

Check the status

    # systemctl status keepalived
    ● keepalived.service - LVS and VRRP High Availability Monitor
       Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)
       Active: active (running) since Tue 2019-08-20 15:53:24 CST; 4s ago
    $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)
     Main PID: 24075 (keepalived)
        Tasks: 2
       Memory: 784.0K
       CGroup: /system.slice/keepalived.service
               ├─24075 /usr/sbin/keepalived -D
               └─24076 /usr/sbin/keepalived -D

    Aug 20 15:53:24 node3 systemd[1]: Started LVS and VRRP High Availability Monitor.
    '/etc/keepalived/keepalived.conf'.
    for interface eth0
    for interface eth0
    Aug 20 15:53:24 node3 Keepalived_vrrp[24076]: Registering gratuitous ARP shared channel
    Aug 20 15:53:24 node3 Keepalived_vrrp[24076]: (VI_1) removing VIPs.
    Aug 20 15:53:24 node3 Keepalived_vrrp[24076]: (VI_1) Entering BACKUP STATE (init)
    Aug 20 15:53:24 node3 Keepalived_vrrp[24076]: VRRP sockpool: [ifindex(2), family(IPv4), proto(112), unicast(0), fd(11,12)]
    Aug 20 15:53:24 node3 Keepalived_vrrp[24076]: VRRP_Script(check_haproxy) succeeded
    Aug 20 15:53:24 node3 Keepalived_vrrp[24076]: (VI_1) Changing effective priority from 100 to 102

Check the interface addresses (only eth0 is shown)

    # ip addr
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 02:00:0a:ba:3f:70 brd ff:ff:ff:ff:ff:ff
        inet 10.186.63.112/24 brd 10.186.63.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet6 fe80::aff:feba:3f70/64 scope link
           valid_lft forever preferred_lft forever

At this point the VIP is bound to node01, and haproxy is reached through the VIP.
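Reading the `ip addr` output by eye works for two nodes, but the same check can be scripted. The following is a minimal sketch: the function name `has_vip` is hypothetical, and it only looks for an `inet <VIP>/` entry in whatever `ip addr` text is piped into it.

```shell
#!/bin/bash
# has_vip VIP: read `ip addr` output on stdin; exit status 0 when VIP
# appears as an IPv4 address ("inet VIP/prefix"), 1 otherwise.
has_vip() {
    grep -qF "inet $1/"
}
```

On a node this could be used as `ip addr show eth0 | has_vip 10.186.63.249 && echo "VIP is bound here"`, with eth0 and the VIP taken from the configuration above.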

Verifying VIP failover

Manually stop the haproxy process on node01, then check whether the keepalived process is still running

    # ps -ef|grep haproxy
    root 17229 1 0 16:06 ? 00:00:08 haproxy -f /data/haproxy/conf/haproxy.cfg
    root 22793 2499 0 16:51 pts/1 00:00:00 grep --color=auto haproxy
    # kill 17229
    # systemctl status keepalived
    ● keepalived.service - LVS and VRRP High Availability Monitor
       Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)
       Active: inactive (dead)

    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    Aug 20 16:51:25 node2 systemd[1]: Stopping LVS and VRRP High Availability Monitor...
    Aug 20 16:51:25 node2 Keepalived[22154]: Stopping
    Aug 20 16:51:25 node2 Keepalived_vrrp[22155]: (VI_1) sent 0 priority
    Aug 20 16:51:25 node2 Keepalived_vrrp[22155]: (VI_1) removing VIPs.
    Aug 20 16:51:26 node2 Keepalived_vrrp[22155]: Stopped - used 0.022975 user time, 0.167588 system time
    Aug 20 16:51:26 node2 Keepalived[22154]: Stopped Keepalived v2.0.18 (07/26,2019)
    Aug 20 16:51:26 node2 systemd[1]: Stopped LVS and VRRP High Availability Monitor.
    # ip addr
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 02:00:0a:ba:3f:6c brd ff:ff:ff:ff:ff:ff
        inet 10.186.63.108/24 brd 10.186.63.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet6 fe80::aff:feba:3f6c/64 scope link
           valid_lft forever preferred_lft forever

The keepalived service has stopped, and the VIP is no longer bound to node01.

Verify on node02

    # systemctl status keepalived
    ● keepalived.service - LVS and VRRP High Availability Monitor
       Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)
       Active: active (running) since Tue 2019-08-20 16:50:59 CST; 1min 25s ago
    $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)
     Main PID: 12802 (keepalived)
        Tasks: 2
       Memory: 684.0K
       CGroup: /system.slice/keepalived.service
               ├─12802 /usr/sbin/keepalived -D
               └─12803 /usr/sbin/keepalived -D

    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    # ip addr
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 02:00:0a:ba:3f:70 brd ff:ff:ff:ff:ff:ff
        inet 10.186.63.112/24 brd 10.186.63.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet 10.186.63.249/32 scope global eth0
           valid_lft forever preferred_lft forever
        inet6 fe80::aff:feba:3f70/64 scope link
           valid_lft forever preferred_lft forever

The VIP has moved to node02. Access haproxy through the VIP.

haproxy is still reachable as before.

Next, start haproxy and keepalived on node01 and check whether the VIP returns to node01

    # haproxy -f /data/haproxy/conf/haproxy.cfg
    # ps -ef|grep haproxy
    root 22830 1 0 16:55 ? 00:00:00 haproxy -f /data/haproxy/conf/haproxy.cfg
    root 22835 2499 0 16:55 pts/1 00:00:00 grep --color=auto haproxy
    # systemctl start keepalived
    # systemctl status keepalived
    ● keepalived.service - LVS and VRRP High Availability Monitor
       Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)
       Active: active (running) since Tue 2019-08-20 16:56:08 CST; 4s ago
    $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)
     Main PID: 22845 (keepalived)
        Tasks: 2
       Memory: 664.0K
       CGroup: /system.slice/keepalived.service
               ├─22845 /data/keepalived/sbin/keepalived -D
               └─22846 /data/keepalived/sbin/keepalived -D

    Aug 20 16:56:11 node2 Keepalived_vrrp[22846]: (VI_1) received lower priority (102) advert from 10.186.63.112 - discarding
    Aug 20 16:56:11 node2 Keepalived_vrrp[22846]: (VI_1) Receive advertisement timeout
    Aug 20 16:56:11 node2 Keepalived_vrrp[22846]: (VI_1) Entering MASTER STATE
    Aug 20 16:56:11 node2 Keepalived_vrrp[22846]: (VI_1) setting VIPs.
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    # ip addr
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 02:00:0a:ba:3f:6c brd ff:ff:ff:ff:ff:ff
        inet 10.186.63.108/24 brd 10.186.63.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet 10.186.63.249/32 scope global eth0
           valid_lft forever preferred_lft forever
        inet6 fe80::aff:feba:3f6c/64 scope link
           valid_lft forever preferred_lft forever

Once haproxy and keepalived are started, the VIP returns to node01.

Verify again on node02

    # systemctl status keepalived
    ● keepalived.service - LVS and VRRP High Availability Monitor
       Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)
       Active: active (running) since Tue 2019-08-20 16:50:59 CST; 7min ago
    $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)
     Main PID: 12802 (keepalived)
        Tasks: 2
       Memory: 712.0K
       CGroup: /system.slice/keepalived.service
               ├─12802 /usr/sbin/keepalived -D
               └─12803 /usr/sbin/keepalived -D

    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    for 10.186.63.249
    Aug 20 16:56:11 node3 Keepalived_vrrp[12803]: (VI_1) Master received advert from 10.186.63.108 with higher priority 152, ours 102
    Aug 20 16:56:11 node3 Keepalived_vrrp[12803]: (VI_1) Entering BACKUP STATE
    Aug 20 16:56:11 node3 Keepalived_vrrp[12803]: (VI_1) removing VIPs.
    # ip addr
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 02:00:0a:ba:3f:70 brd ff:ff:ff:ff:ff:ff
        inet 10.186.63.112/24 brd 10.186.63.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet6 fe80::aff:feba:3f70/64 scope link
           valid_lft forever preferred_lft forever

keepalived on node02 has removed the VIP and returned to the BACKUP state.
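The priorities 152 and 102 in the log lines match the configured priority values 150 and 100 plus the vrrp_script weight of 2, which keepalived adds while the check script succeeds. The arithmetic can be sketched as follows. The function name `effective_priority` is hypothetical, and only the positive-weight case used in this setup is modeled.

```shell
#!/bin/bash
# effective_priority BASE WEIGHT SCRIPT_OK
# Prints the priority a node would advertise: BASE plus WEIGHT while the
# tracked script succeeds (SCRIPT_OK=yes), plain BASE otherwise.
# Assumption: WEIGHT is positive, as in the configuration above.
effective_priority() {
    local base="$1" weight="$2" ok="$3"
    if [ "$ok" = "yes" ] && [ "$weight" -gt 0 ]; then
        echo $((base + weight))
    else
        echo "$base"
    fi
}
```

For example, `effective_priority 150 2 yes` corresponds to node01 with haproxy running, and `effective_priority 100 2 yes` to node02.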

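The steps above show that VIP failover works as intended.

Appendix

Configuration file for a single node of the HA cluster

The configuration file template used in the test environment is as follows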

    dbms.directories.import=import
    true
    dbms.connector.bolt.listen_address=10.186.63.112:7687
    true
    dbms.connector.http.listen_address=10.186.63.112:7474
    true
    dbms.jvm.additional=-XX:+UseG1GC
    dbms.jvm.additional=-XX:-OmitStackTraceInFastThrow
    dbms.jvm.additional=-XX:+AlwaysPreTouch
    dbms.jvm.additional=-XX:+UnlockExperimentalVMOptions
    dbms.jvm.additional=-XX:+TrustFinalNonStaticFields
    dbms.jvm.additional=-XX:+DisableExplicitGC
    dbms.jvm.additional=-Djdk.tls.ephemeralDHKeySize=2048
    true
    dbms.windows_service_name=neo4j
    dbms.jvm.additional=-Dunsupported.dbms.udc.source=tarball
    dbms.connectors.default_listen_address=10.186.63.112
    dbms.connectors.default_advertised_address=10.186.63.112
    dbms.mode=HA
    ha.server_id=112
    ha.initial_hosts=10.186.63.108:5001,10.186.63.112:5001,10.186.63.114:5001
    ha.host.data=10.186.63.112:6001
    ha.join_timeout=30
    dbms.security.procedures.unrestricted=algo.*,apoc.*
    dbms.jvm.additional=-Dcom.sun.management.jmxremote.port=3637

    # Backup configuration
    true
    dbms.backup.address=10.186.63.112:6362

    # Transaction log configuration
    dbms.tx_log.rotation.retention_policy=5G size
    dbms.checkpoint.interval.time=3600s
    dbms.checkpoint.interval.tx=1
    dbms.tx_log.rotation.size=250M

    # LDAP configuration
    true
    dbms.security.auth_provider=ldap
    dbms.security.ldap.host=ldap://10.186.61.39:389
    dbms.security.ldap.authentication.mechanism=simple
    dbms.security.ldap.authentication.user_dn_template=uid={0},ou=users,dc=actionsky,dc=com
    false
    true
    dbms.security.ldap.authorization.system_username=cn=manager,dc=actionsky,dc=com
    dbms.security.ldap.authorization.system_password=123456
    dbms.security.ldap.authorization.user_search_base=ou=users,dc=actionsky,dc=com
    dbms.security.ldap.authorization.user_search_filter=(&(objectClass=*)(uid={0}))
    dbms.security.ldap.authorization.group_membership_attributes=memberOf
    dbms.security.ldap.authorization.group_to_role_mapping=\
    = reader; \
    = editor; \
    = publisher; \
    = architect; \
    = admin
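Since the template is specific to one node (10.186.63.112), the node-specific values have to be changed for each of the other nodes. The following is a minimal sketch of how that could be scripted, assuming the template is stored with one setting per line. The function name `render_node_conf` is hypothetical, and deriving ha.server_id from the last octet of the IP is an assumption based on the values used in this article (108 for 10.186.63.108, 112 for 10.186.63.112). ha.initial_hosts is deliberately left untouched because it lists all cluster members.

```shell
#!/bin/bash
# render_node_conf NODE_IP: read a neo4j.conf template on stdin and print it
# with the node-specific addresses and ha.server_id adapted to NODE_IP.
# Assumption: ha.server_id is the last octet of NODE_IP.
render_node_conf() {
    local ip="$1" id="${1##*.}"
    sed -E \
        -e "s#^(dbms\.connector\.(bolt|http)\.listen_address=)[0-9.]+(:[0-9]+)\$#\1${ip}\3#" \
        -e "s#^(dbms\.connectors\.default_(listen|advertised)_address=)[0-9.]+\$#\1${ip}#" \
        -e "s#^(ha\.host\.data=)[0-9.]+(:[0-9]+)\$#\1${ip}\2#" \
        -e "s#^(dbms\.backup\.address=)[0-9.]+(:[0-9]+)\$#\1${ip}\2#" \
        -e "s#^(ha\.server_id=)[0-9]+\$#\1${id}#"
}
```

A call could look like `render_node_conf 10.186.63.114 < neo4j.conf.template > /data/neo4j/conf/neo4j.conf`, where the template file name is hypothetical and the target path follows the /data/neo4j installation directory used earlier.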